Update: Research into this topic continues. More recent thinking on the Second Law of Thermodynamics can be found here.

The Second Law of Thermodynamics is by far my favorite of the bunch. If I were stranded on a deserted island (one preferably laden with blueberry pie and blue velvet cake) with only one of them, my first choice would be the Second. It’s the one that describes the tendency of heat to spread out rather than stay in one spot. It seems so simple you could almost dismiss it as trivial, but it actually has huge physical and philosophical implications (even underlying our perception of time*), and it shapes a lot of my worldview. I just realized I’ve never honored it by blogging about it (no, that’s not contradictory, thank you).

A cup of coffee cools down only because its heat tends to spread out. Likewise, a pot of water on a stove will boil and evaporate only because boiling and evaporating help the heat spread out. The Second Law is always there in the background, giving molecules a little nudge to remind them to “DISPERSE YOUR HEAT, INFERNAL HEATHENS!!”

Like the pot of water, the Earth is subject to a constant blast of heat from the Sun. Instead of boiling, atoms on Earth do other things to help dissipate that heat. One is to randomly form compounds with neighboring atoms, because forming a bond releases a tiny amount of energy as heat! (The bonded electrons settle into a lower-energy arrangement than they had in the separate atoms.) If bonding didn’t release heat, most compounds wouldn’t form spontaneously in the first place.

What I find most astounding about this is that if you extrapolate a few billion years of spontaneous bonding in an ever-constant drive to dissipate heat, life itself can originate. This isn’t a new idea, and I’m not the first to have thought of it. First, given enough random bond formation, those molecular compounds are probabilistically bound to produce one that just happens to have the unique capacity to react with other molecules in the environment. Given heat and proximity to those molecules, such compounds will reconfigure them in different, random ways. Eventually, one such compound (or a group of cooperating compounds) will stochastically form that uses heat and certain other material to reconfigure that material into a copy of itself. This first spontaneously formed, self-replicating compound (or group) will have free rein over the environment to replicate at will without competition.

Once it becomes the norm, the ongoing energy transfer into the system will drive its random interactions with material in its environment. It will stochastically mutate into variants that are more efficient at dissipating energy (by growing larger and incorporating more energy-releasing bonds, or by being more efficient at self-replication). These variants (possibly polymers or ribozymes) will survive and replicate at the expense of the earlier ones. Such compounds may construct other compounds that aid the replication of the primary compound. Perhaps they construct compounds that draw in certain raw materials, or that protect the primary compound from destruction by other reactive compound sets.

That might sound complicated, but it is all driven by the very simple dictum of the Second Law. Given time and chance, such a variation will spontaneously occur. And once it does, it can quickly take over an environment because of its superior fitness. Now the cycle resets at a higher level of complexity, leading to cellular life, organelles, simple organisms, and more complex ones. In each case, random chemical variation can create improvements that will dominate an environment. It may be shocking to consider, but there is no inherent morality here. This is all an inevitable consequence of the tendency for matter to dissipate the energy being blasted upon it. The evolutionary cycle of increasing complexity can even extend beyond biological life to explain group and societal behavior. Humans who are better able to set aside differences and cooperate to promote their general welfare will out-smart and out-compete those who don’t, or who do so less effectively (such as those who didn’t articulate and defend their ideas as attractively as others on Blocvox *wink*).

Setting aside the possibility of an entirely separate race that is more physically resilient and/or socially advanced, it is the propagation of the collective meta-entity, as defined by its memetic make-up, that serves as the foundation for another cycle of increasing complexity. Social groups that cooperate in stable partnerships will outlast those that do not. Again, all because of the simple need to dissipate that interminable heat as efficiently as possible. O mighty Second Law, we are here to serve you.**

A paper was published last year that asserts progress along these lines. It would be a monumental breakthrough for the science behind this to be tightened up, and it’s an area I would love to study in much more depth at some point (retirement?!). In the meantime, I must remain content with the idea that we exist for no other reason than to more efficiently absorb heat from the Sun. Given the mystery of the “hard problem” of consciousness, I vote that we accomplish this through specialization and cooperation rather than through competition (of course avoiding the trap of cooperating with those who only want to compete). The cycle above even explains the tendency of humans on a large scale to be skeptical of each other, as we compete to prove to the universe who’s best at dissipating heat. (Rumor has it, the winning group gets an expenses-paid vacation to Risa *doublewink*.) So it’s obvious what we need to do in order to achieve world peace: extinguish that meddling Sun. While we work on that, I suggest we resign ourselves to absorbing as much energy as possible.

…in the form of donuts.

* If a cool cup of coffee were spontaneously drawing heat out of the room and warming up, you would conclude that you were moving backward through time. Well, you would if you had the mind of a theoretical physicist.

** This statement is included for comedic effect only. The Second Law of Thermodynamics is a physical principle and is not intended for use as an anthropomorphic deity. Stunts performed by professionals on a closed course. Batteries not included. Side effects include dry mouth, swelling, dizziness, and tizzyness.

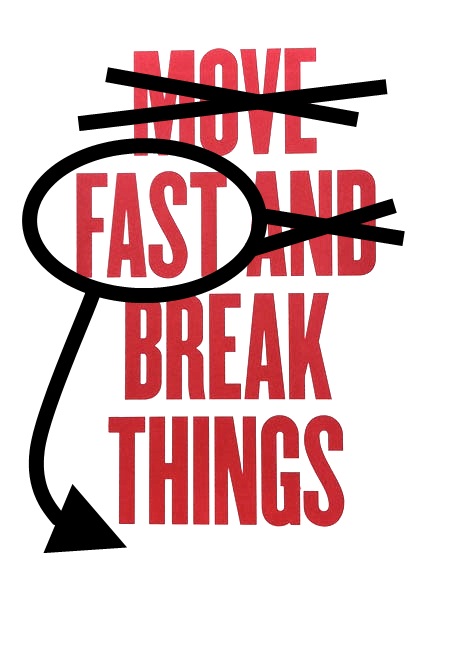

The idea of technical debt is critical to employee work-life balance and to customer relationships. However, many companies these days seem willing to carry that debt, obsessed with a “move fast/break things” culture where any

So it’s important for the language used to capture the business vulnerability that is associated with difficult-to-change software. While we could adopt a

This also means that engineering teams are charged with assessing brittleness and raising it as a concern. Since brittleness is so intangible, it can be hard to assess. One approach that gets the team pretty close is to rank the codebase (or parts of it) on a brittleness scale from 1 to 5. What this assessment really captures is the team’s gut feel for how comfortable they’d be making changes to that system. Ideally, engineers would feel no hesitation or discomfort at the notion of changing any given module. But on time-constrained projects, there will invariably be areas that the team hopes will never need changing, and the thought of changing them may induce violent involuntary spasms, due to the disastrous brittleness of the code. Assessing and raising these concerns doesn’t just benefit the business. It also protects the engineers’ own work-life balance, which keeps their average hourly compensation from dwindling to peanuts (brittle or not).
